Hello readers of cloudadvisors.net, my name is Kevin Kekule and I’m an intern at Soeldner Consult GmbH. My objective is to explore Tanzu Kubernetes. I’m pleased to start my series of articles on Tanzu Kubernetes, in which I’ll gradually dive deeper into the topic until I eventually install Kubeflow in different ways.

After this short blog post you’ll understand what the different components of Workload Management are and how they relate to standard Kubernetes. I’ll give you a high-level overview of what Tanzu Kubernetes is and what it is not.

Tanzu, Supervisor Cluster and Workload Management?

What is the Supervisor Cluster and where do its pods run? What is a TKG cluster and where do its pods run?

In Tanzu Kubernetes the user works at a deeper level of Kubernetes. Nothing more, nothing less.

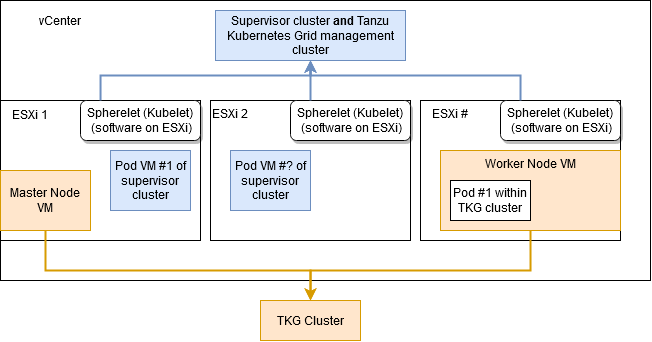

The highest level in vCenter 7 is the Supervisor Cluster, which is set up via the vSphere Web Client. The feature that sets it up is called Workload Management. The ESXi hosts are configured as worker nodes, and three VMs are used as master nodes. The master nodes don’t need as many resources as the worker nodes, which is why they run as VMs. Untainting a master so that pods can be scheduled onto it has its own drawbacks. For now you can ignore these VMs and concentrate on the worker nodes, i.e. the ESXi hosts.

All the necessary parts are installed, e.g. the Spherelet (a kubelet with extra functionality), etcd, the API server and kube-proxy. Tanzu Kubernetes Grid (TKG) is later configured to use this Supervisor Cluster as the TKG management cluster. TKG functionality is provided by the Supervisor Cluster, which in turn is installed by Workload Management.

Pods are run as VMs on these ESXi hosts, even in different namespaces.

A Tanzu Cluster

Using TKG to create a Kubernetes cluster powers up multiple VMs, which act as the new master and worker nodes. This new cluster is bound to a namespace, but it is not realised as multiple pods.
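To make the idea of a namespace-bound cluster built from VMs more concrete, here is a minimal sketch in Python that assembles a `TanzuKubernetesCluster` manifest and prints it as JSON. It assumes the `run.tanzu.vmware.com/v1alpha1` API; the helper `tkg_cluster_manifest` is hypothetical, and the cluster name, namespace, version, VM class and storage class are placeholders that will differ in your environment.

```python
import json


def tkg_cluster_manifest(name, namespace, version, vm_class, storage_class,
                         control_plane_count=1, worker_count=2):
    """Build a TanzuKubernetesCluster manifest as a plain dict.

    Hypothetical helper for illustration only; all values passed in
    are placeholders and depend on the vSphere environment.
    """
    def node_pool(count):
        # Every node in the pool is a VM of the given class.
        return {"count": count, "class": vm_class, "storageClass": storage_class}

    return {
        "apiVersion": "run.tanzu.vmware.com/v1alpha1",
        "kind": "TanzuKubernetesCluster",
        # The cluster is bound to a namespace of the Supervisor Cluster.
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "distribution": {"version": version},
            "topology": {
                "controlPlane": node_pool(control_plane_count),  # master VMs
                "workers": node_pool(worker_count),              # worker VMs
            },
        },
    }


manifest = tkg_cluster_manifest(
    name="demo-cluster",                  # placeholder
    namespace="demo-namespace",           # placeholder
    version="v1.18",                      # placeholder
    vm_class="best-effort-small",         # placeholder, environment-specific
    storage_class="demo-storage-policy",  # placeholder, environment-specific
)
print(json.dumps(manifest, indent=2))
```

Saved to a file, the printed JSON could presumably be applied with `kubectl apply -f` while kubectl is pointed at the Supervisor Cluster namespace. The point of the sketch is only that the cluster is described by counts of master and worker VMs inside a namespace, not by pods.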

Firing up pods in this new TKG cluster works differently. Here Kubernetes shows its familiar default behaviour: Docker is used to start pods within these VMs.

Goals of this blogpost series

The following list may still change slightly, but these topics are currently planned:

- Setting up Workload Management, plus some help for when it’s not working

- kubectl + the vSphere CLI plugin; firing up the first pods in the Supervisor Cluster

- Creating TKG clusters within namespaces; first pods in the deeper levels of Workload Management

- Installing Kubeflow without Docker Harbor, but with integration into the domain controller

- Using the vMLP fling for Kubeflow with Docker Harbor
