3.1 Introduction

Designing a vRealize Automation environment is a challenging task. This is due to its underlying architecture, but also to the demand to scale the solution to environments with thousands of virtual machines. In addition, a variety of business requirements must be met. Many of them can be met out of the box, but others require a thoughtful design before the implementation starts.

This chapter starts with a short overview of design principles and challenges and then covers how to design a vRealize Automation environment.

3.2 Approaching the design and architecture

When customers begin the deployment of a vRealize Automation environment, they often struggle to find an adequate approach.

| Fig. 1: Stages in developing a vRealize Automation design |

In general, you should begin any project by gathering the requirements and carrying out an assessment. Knowing the business and technical requirements helps you to decide which components of vRealize Automation should be deployed. As in other projects, the design process can be divided into different stages, as shown in Fig. 1.

3.2.1 Phase I: Requirements gathering and assessment

It is very difficult to build a cloud infrastructure if you don’t know the business needs and the corresponding requirements. Therefore, at the beginning of the design stage, it is crucial to engage with the business and find out the requirements by involving the different stakeholders. Sometimes the stakeholders engaged in the project have only a vague understanding of a cloud management platform. In that case, it is a good idea to set up a proof of concept (PoC) first. This helps to understand technical issues and is extremely valuable for gathering further feedback, so that you have solid planning information. Other approaches to getting feedback include:

- One-on-one meetings with IT leaders and top management stakeholders.

- Team meetings with the current team that is responsible for managing technical operations.

- Prototyping, initial engagement surveys, use cases or brainstorming.

In many companies, there are common drivers for implementing a cloud infrastructure. First, testers and developers need to deploy systems rapidly. Such systems often have a short life span and should be isolated from the production environment. If users have to wait until a traditional IT support organization provisions the requested resources, a significant amount of time is spent waiting, which slows down development. Second, many companies want to establish an IT service broker that offers services to their customers. While end users could request resources from public cloud providers on their own, this is often not desired, because it would frequently circumvent existing governance and compliance rules. The service catalogue feature of vRealize Automation helps to meet these goals while keeping the costs and utilization of the cloud infrastructure under control. Last but not least, many companies are trying to build their own private cloud in order to supply IT resources in the same manner as a public cloud provider does.

3.2.2 Phase II: Analysis

Once you have gathered the detailed requirements from your stakeholders, you should analyse them before you start with the planning or the design phase. This involves identifying the services to be deployed. For example, let’s say that the production environment should offer Linux provisioning in the first stage and that all deployed virtual machines must be integrated into the customer’s environment (for example, they should be registered in a Puppet environment or have automatic patching enabled). Another important aspect is capacity planning. It is crucial to know how many virtual machines (and what kind of virtual machines) will be deployed in order to estimate the hardware requirements of the vRealize Automation deployment accurately. Other questions include:

- Are there different kinds of workloads on different platforms?

- Do you have to meet certain compliance requirements?

- What kind of end users will use the self-service portal? How do you want to group them?

- Is there a need for multi-tenancy? Can different tenants share the same hardware or do they need to be completely isolated from each other?

- Is there any tool that must be integrated into the provisioning process? For example, many companies use configuration management tools like Puppet or Chef.

- How is billing done?

- What kind of DNS server are you using for your workload?

- What other tools must be integrated (for example antivirus, asset management, CMDB)?
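To make the capacity-planning question more tangible, the following Python sketch sums the raw vCPU, memory and disk demand of a set of planned workload profiles. The profile names, VM counts and sizes are hypothetical placeholders, not figures from this chapter. Replace them with the numbers gathered from your stakeholders, and keep in mind that overcommit ratios and headroom are deliberately left out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WorkloadProfile:
    """One class of virtual machine the cloud is expected to host (hypothetical)."""
    name: str
    vm_count: int
    vcpu_per_vm: int
    ram_gb_per_vm: int
    disk_gb_per_vm: int


def total_demand(profiles):
    """Sum the raw resource demand of all profiles, without overcommit or headroom."""
    return {
        "vms": sum(p.vm_count for p in profiles),
        "vcpu": sum(p.vm_count * p.vcpu_per_vm for p in profiles),
        "ram_gb": sum(p.vm_count * p.ram_gb_per_vm for p in profiles),
        "disk_gb": sum(p.vm_count * p.disk_gb_per_vm for p in profiles),
    }


# Illustrative numbers only.
planned = [
    WorkloadProfile("linux-small", vm_count=200, vcpu_per_vm=1, ram_gb_per_vm=2, disk_gb_per_vm=20),
    WorkloadProfile("linux-large", vm_count=50, vcpu_per_vm=4, ram_gb_per_vm=16, disk_gb_per_vm=100),
]
demand = total_demand(planned)
print(demand)
```

The resulting totals are a starting point for sizing the resource clusters; they say nothing yet about the management components themselves.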

3.2.3 Phase III: Planning

Once all the required information has been gathered, we can begin with the planning phase. Planning usually involves not only vRealize Automation itself, but also the underlying storage, networking and virtual environment. In short, the planning should also cover the following aspects:

- Networking planning

- Storage planning

- ESXi hosts planning

- Cluster planning

- High-availability planning

- Capacity planning

- VM configuration

- Geolocation planning

3.2.4 Phase IV: Design

Once the requirements engineering, analysis and planning have been done, we can start with the design document. Without doubt, this is the stage on which the most time is spent, and certainly the toughest one. The design can be roughly divided into the design of the underlying cloud infrastructure and the design of vRealize Automation itself. The most important deliverables of the design phase are the conceptual design, the logical design and the physical design. These design documents are quite often misunderstood, so their most important characteristics are outlined here.

First of all, you should create a conceptual design. It is based only on the business requirements and does not contain any information about the implementation. The conceptual design can then be used to give a basic overview to high-level corporate executives. In the case of vRealize Automation, typical business requirements mentioned in the conceptual design are:

- Providing single sign-on authentication.

- Setting up a dedicated network for each business group.

- Offering load balancers as a service.

- Setting up an internal IP address management system.

The logical design contains more detail and provides high-level guidance on the implementation, but still does not include any implementation details. As an example, a logical design of a vRealize Automation environment could refine the business requirements as follows:

- Each department will be mapped to a business group that may provision no more than 50 virtual machines. All business groups are isolated from each other.

- Networks can be internal or external. Machines provisioned to internal networks will have private IP addresses, while machines deployed to an external network will obtain a public IP address.

- Business group managers are allowed to deploy load balancers to the network and to set up firewall rules that are based on NSX security groups.
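A logical design rule such as the limit of 50 virtual machines per business group can be written down as a small, checkable model. The following Python sketch is only an illustration of that rule. The class `BusinessGroup` and its methods are hypothetical and are not part of any vRealize Automation API.

```python
from dataclasses import dataclass, field

MAX_VMS_PER_BUSINESS_GROUP = 50  # limit taken from the logical design example above


@dataclass
class BusinessGroup:
    """Hypothetical model of a business group; not a vRealize Automation API object."""
    department: str
    machines: list = field(default_factory=list)

    def can_provision(self, count=1):
        """Return True if `count` more machines still fit under the limit."""
        return len(self.machines) + count <= MAX_VMS_PER_BUSINESS_GROUP

    def provision(self, machine_name):
        """Record a new machine or fail if the group has reached its limit."""
        if not self.can_provision():
            raise RuntimeError(
                f"{self.department}: limit of {MAX_VMS_PER_BUSINESS_GROUP} machines reached"
            )
        self.machines.append(machine_name)


finance = BusinessGroup("finance")
for i in range(50):
    finance.provision(f"fin-vm-{i:02d}")
print(finance.can_provision())
```

Writing the rule down in this form makes it easy to review with stakeholders before it is translated into the actual configuration in the physical design.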

Finally, the physical design includes the implementation details and is based on the logical design. For example, when setting up a vRealize Automation infrastructure, you would have to specify, among others, the following configuration items:

- Number and size (CPU and memory) of each vRealize Automation component.

- General network design including information about VLAN configuration, routing, gateways, DNS and DHCP servers.

3.3 Design elements

3.3.1 Cloud infrastructure design

Compared with many other VMware solutions, the design phase of a cloud management platform is more complex, because vRealize Automation is not a stand-alone product, but must be integrated into an existing virtual environment. Based on the input of the planning phase, we have to determine whether a deployment for a small, medium or large environment is being planned. Usually, the following architecture design documents or artefacts should be created:

- VMware vSphere management layer design

- Network layer design

- Storage layer design

- Sizing and scaling capability design

The design document should also cover the following subcomponents:

- Readiness

- Performance

- Manageability

- Assumptions

- Constraints

- Risks & implications

- Security

- Recovery

As vRealize Automation will usually be deployed on top of an existing virtual infrastructure, a good deal of work has to be done on the design and implementation of that underlying infrastructure. There are many books and white papers which cover that topic, so we will not go into it any further.

3.3.2 vRealize Automation design

For vRealize Automation, the following design documents are the most important ones:

- Service catalogue

- Infrastructure-as-a-Service offerings

- Advanced Service Designer

- vRealize Business integration and service costing

- Capacity and performance monitoring

- Orchestrator workflows and possible third party integrations

- NSX integration

Let’s briefly go through each of these elements.

By means of the service catalogue, organizations can create and manage catalogues of IT services that are approved for use on vRealize Automation. These IT services can include everything ranging from virtual machines, servers, software, and databases to complete multi-tier application architectures. The service catalogue helps you to centrally manage commonly deployed IT services and helps you to achieve consistent governance and meet your compliance requirements. Last but most importantly, users will be able to quickly deploy only the approved IT services they need. When setting up the design document for the service catalogue, we have to consider how to categorize the published catalogue items into services, decide who is entitled to perform which actions on the items and ensure that the catalogue items are properly published.

The Infrastructure-as-a-Service module offers a model to provision servers and desktops across physical, virtual and cloud environments. vRealize Automation abstracts from the underlying technologies and provides a consistent model for deploying IaaS resources. Besides being able to deploy to the aforementioned environments, vRealize Automation centrally governs the lifecycle of the deployed resources. Once again, there is a lot of work required to design the IaaS part. The vRealize Automation IaaS model has to be aligned with the underlying infrastructure, which includes defining compute resources, fabric groups, reservations and blueprints (to mention just some of the components) in vRealize Automation.

The Advanced Service Designer helps to implement and publish day-2 operations on provisioned resources and to integrate XaaS workflows based on vRealize Orchestrator. As with the IaaS services, a lot of components have to be designed, including service blueprints, resource mappings and actions.

Costing also requires a thorough design. Basically, vRealize Business helps you to identify your cloud infrastructure costs and to map these costs to individual service offerings. However, a consistent pricing and chargeback mechanism is a key requirement in many organizations. This also involves setting up reporting and taking variations of the service offerings into account. As an example, just think about letting the end user choose from different options like backup, SLA level or monitoring integration. All of these options can lead to different prices, even if it is the same virtual machine image that is being deployed.
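The effect of such options on the price can be illustrated with a few lines of Python. This is a minimal sketch of option-based pricing, not the way vRealize Business calculates costs. The base price, the option names and the surcharges are invented for the example.

```python
BASE_PRICE_PER_MONTH = 40.0  # hypothetical price of the base VM image

# Hypothetical monthly surcharges per selectable option.
OPTION_SURCHARGES = {
    "backup": 10.0,
    "monitoring": 5.0,
    "sla_gold": 25.0,
}


def monthly_price(selected_options):
    """Return the monthly price of one VM for the chosen options."""
    chosen = set(selected_options)  # each option is charged at most once
    unknown = chosen - set(OPTION_SURCHARGES)
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")
    return BASE_PRICE_PER_MONTH + sum(OPTION_SURCHARGES[o] for o in chosen)


# The same image ends up with two different prices.
print(monthly_price([]))
print(monthly_price(["backup", "sla_gold"]))
```

A pricing design document would typically contain such a table of options and surcharges, together with the rules for combining them.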

Monitoring and capacity management must also be properly designed. From an operational point of view, virtualization transforms the IT infrastructure team from a system builder into a service provider. The application team no longer owns the physical infrastructure; instead, the infrastructure is now shared. This leads to two-tier capacity management. At the VM level, capacity management is done by the application team. At the physical infrastructure level, the infrastructure team must take care of capacity management.

With vRealize Automation, deployed virtual machines will usually not be created in a vacuum, but must be integrated into an existing environment. A typical integration encompasses the installation of antivirus software or a monitoring agent, setting up the machine as a Puppet node or joining Active Directory. Some of these components can be configured out of the box, while others can be installed via vRealize Orchestrator. Hence, these integrations need to be planned as well.

Virtual machines deployed by vRealize Automation need network connectivity as well. For many companies it might be sufficient to deploy machines into existing networks (or just create a bunch of networks dedicated to vRealize Automation beforehand). However, sometimes there is a need for a sophisticated network design based on the principles of micro-segmentation if you want to offer advanced services like load balancers to your customers. In that case NSX can help out.

As you can see, there is a lot of work needed for designing these components. In the remainder of this chapter we will cover the architecture of the vRealize Automation environment. In the subsequent chapters, we will also cover the other components.

3.4 Design of a vRealize Automation environment

As discussed, building a solid solution environment requires strong planning and focus. In contrast to other VMware products, the deployment of vRealize Automation is quite cumbersome (even though the new installation wizard relieves a lot of the pain from the administrator now).

3.4.1 Cluster configuration

We have to consider two kinds of virtual machines in a vRealize Automation environment:

- Virtual machines that have been deployed for end users, in other words compute VMs / production VMs that actually run the business critical workload.

- Virtual machines that make up the management infrastructure such as Active Directory, vSphere, Monitoring, SQL Server and so on.

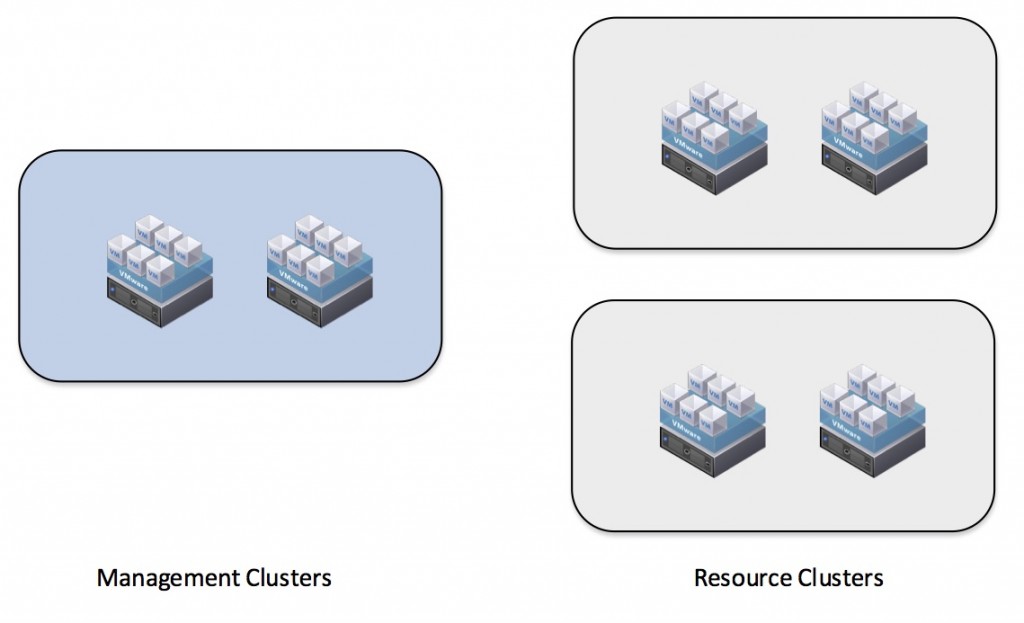

| Fig. 2: vRealize Automation clusters |

Fig. 2 shows the high-level logical architecture for such a cluster recommendation. The vSphere resources are organized and separated into:

- A management cluster containing all core components and services to run the cloud.

- One or more resource clusters that represent dedicated resources for the cloud consumption. All the clusters of ESXi hosts can be managed by a vCenter server.

There are several reasons for using dedicated clusters for the management components and for the deployed resources. First, the separation increases security and isolates the management workloads. Second, resource and management workloads do not compete for resources, which causes less contention. Third, disaster recovery and business continuity are improved. Also, cloud resources can be consistently and transparently managed, carved up or scaled horizontally. Last, having all the management components strictly contained in a relatively small and manageable management cluster facilitates quicker troubleshooting and problem resolution.

The number of resource clusters depends on different criteria. First, it depends on the kinds of virtual machines and hence the workload to be provisioned. If you are setting up different service tiers (for example service tier 1 for critical applications, service tier 2 for normal production workloads, service tier 3 for testing), it is recommended to create a dedicated cluster for each of the service tiers. Another use case for having more than one resource cluster is the requirement to provision to different locations.

3.4.2 Hardware requirements

We have already seen that a vRealize Automation installation consists of different virtual machines and components. While in small environments some of these components can be installed on one node, in a larger environment it makes sense to distribute them over different nodes for high-availability and scaling reasons. Tab. 1 outlines these roles and the corresponding hardware requirements.

| Server role | Small environment | Large environment |
|---|---|---|
| vRealize Automation Appliance | CPU: 2 vCPU, RAM: 12 GB, Disk: 60 GB, Network: 1 GB/s | CPU: 4 vCPU, RAM: 18 GB, Disk: 60 GB, Network: 1 GB/s |
| IaaS Web server | CPU: 2 vCPU, RAM: 2 GB, Disk: 30 GB, Network: 1 GB/s | CPU: 2 vCPU, RAM: 8 GB, Disk: 30 GB, Network: 1 GB/s |
| Infrastructure Manager Server | CPU: 2 vCPU, RAM: 2 GB, Disk: 30 GB, Network: 1 GB/s | CPU: 2 vCPU, RAM: 8 GB, Disk: 30 GB, Network: 1 GB/s |
| DEM Server | CPU: 2 vCPU, RAM: 2 GB, Disk: 30 GB, Network: 1 GB/s | CPU: 2 vCPU, RAM: 8 GB, Disk: 30 GB, Network: 1 GB/s |
| Proxy Server | CPU: 2 vCPU, RAM: 2 GB, Disk: 30 GB, Network: 1 GB/s | Same as required hardware specification |
| MS SQL Server | CPU: 2 vCPU, RAM: 8 GB, Disk: 20 GB, Network: 1 GB/s | CPU: 8 vCPU, RAM: 16 GB, Disk: 80 GB, Network: 1 GB/s |
| vRealize Orchestrator | CPU: 2 vCPU, RAM: 3 GB, Disk: 30 GB, Network: 1 GB/s | Same as required hardware specification |
| vRealize Business Appliance | CPU: 2 vCPU, RAM: 4 GB, Disk: 50 GB, Network: 1 GB/s | Same as required hardware specification |

Tab. 1: Hardware requirements of vRealize Automation

The appliances shown in the table are preconfigured virtual machines, which can easily be added to your vCenter Server or ESXi inventory. IaaS components are installed on a physical or virtual Windows Server 2008 R2 SP1, Windows Server 2012, or Windows Server 2012 R2 machine.
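To get a feeling for the footprint of the management components, the per-node figures from Tab. 1 can be added up. The Python sketch below does this under two assumptions that are not stated in the table: the entries marked "Same as required hardware specification" are taken to mean the small-environment values, and each role runs on exactly one node unless a node count is passed in. The role keys are shortened names chosen for this example.

```python
# (vCPU, RAM in GB, disk in GB) per node, copied from Tab. 1.
SMALL = {
    "vra_appliance": (2, 12, 60),
    "iaas_web": (2, 2, 30),
    "manager": (2, 2, 30),
    "dem": (2, 2, 30),
    "proxy": (2, 2, 30),
    "sql": (2, 8, 20),
    "orchestrator": (2, 3, 30),
    "business": (2, 4, 50),
}

# Assumption: rows marked "Same as required hardware specification"
# reuse the small-environment values.
LARGE = dict(
    SMALL,
    vra_appliance=(4, 18, 60),
    iaas_web=(2, 8, 30),
    manager=(2, 8, 30),
    dem=(2, 8, 30),
    sql=(8, 16, 80),
)


def total_resources(spec, node_counts=None):
    """Sum vCPU, RAM and disk over all roles; roles default to one node each."""
    node_counts = node_counts or {}
    totals = {"vcpu": 0, "ram_gb": 0, "disk_gb": 0}
    for role, (vcpu, ram_gb, disk_gb) in spec.items():
        nodes = node_counts.get(role, 1)
        totals["vcpu"] += nodes * vcpu
        totals["ram_gb"] += nodes * ram_gb
        totals["disk_gb"] += nodes * disk_gb
    return totals


print(total_resources(SMALL))
print(total_resources(LARGE, node_counts={"vra_appliance": 2, "iaas_web": 2}))
```

The `node_counts` argument is there because a high-availability deployment runs several nodes of some roles, which multiplies the per-node requirements accordingly.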

3.4.3 Deployment architecture

Now that we have finished the discussion of the hardware requirements, it’s time to address possible deployment architectures.

VMware basically differentiates between a minimal and an enterprise deployment.

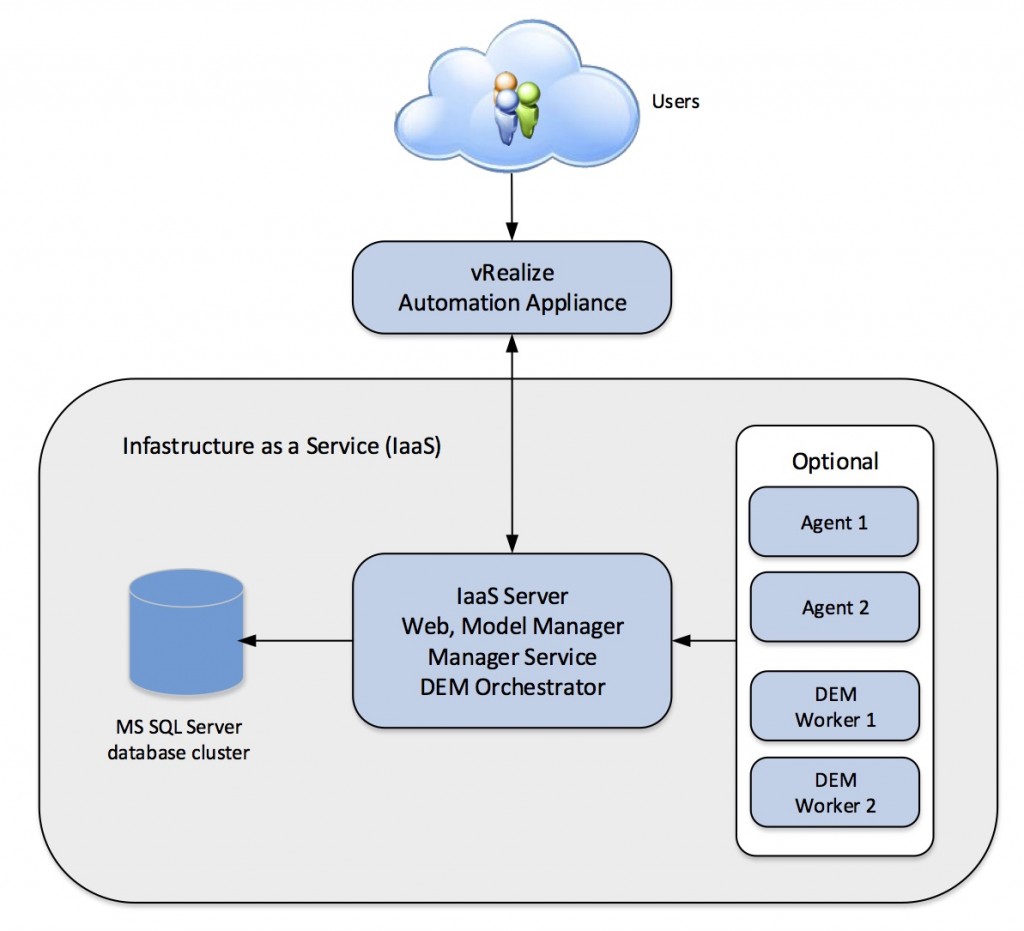

Minimal deployments

Minimal deployments are well suited for development or training environments, or can serve as a proof of concept. They can easily be deployed: there is only one vRealize Automation appliance node and all infrastructure components are installed on a single node. The minimal deployment architecture is shown in Fig. 3. A minimal deployment can cope with the following workload:

- 10000 managed items

- 500 catalog items

- 10 concurrent machine provisions

| Fig. 3: Minimal deployment architecture |

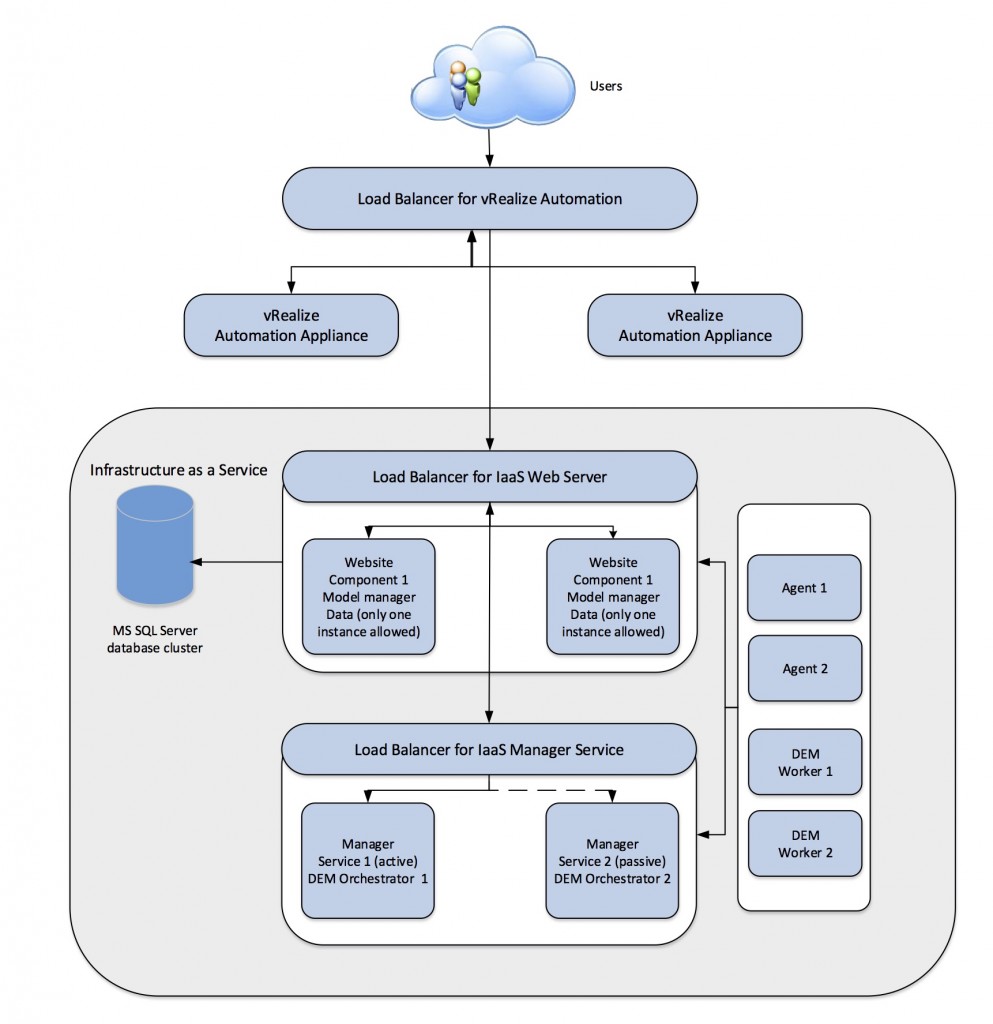

Medium and enterprise deployments

VMware recommends an enterprise deployment for production use. Depending on your company’s needs, a typical medium-sized high-availability environment consists of the following components (the values for enterprise deployments are shown in brackets):

- 2 vRealize Automation appliances

- (2 Orchestrator appliances)

- 2 IaaS Web Servers

- 2 Manager Service Servers

- 1 (2) DEM Server

- 1 (2) Agent Server

- 1 clustered Microsoft SQL Server

Such a deployment can be used to support the following items:

- 50000 managed items

- 2500 catalog items

- 100 concurrent machine provisions
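The workload figures quoted for the two deployment types can be turned into a simple check. The following Python sketch compares an expected load with those figures. The names `Limits`, `fits` and `recommend` are made up for this example, and the check looks at load only. It ignores the recommendation to use an enterprise deployment for any production environment.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Limits:
    managed_items: int
    catalog_items: int
    concurrent_provisions: int


# Figures quoted in this chapter for the two deployment types.
MINIMAL = Limits(managed_items=10_000, catalog_items=500, concurrent_provisions=10)
ENTERPRISE = Limits(managed_items=50_000, catalog_items=2_500, concurrent_provisions=100)


def fits(load, limits):
    """Return True if every dimension of the load stays within the limits."""
    return (
        load.managed_items <= limits.managed_items
        and load.catalog_items <= limits.catalog_items
        and load.concurrent_provisions <= limits.concurrent_provisions
    )


def recommend(load):
    """Pick the smallest deployment type whose quoted figures cover the load."""
    if fits(load, MINIMAL):
        return "minimal"
    if fits(load, ENTERPRISE):
        return "medium/enterprise"
    return "beyond the figures quoted in this chapter"


expected = Limits(managed_items=12_000, catalog_items=300, concurrent_provisions=20)
print(recommend(expected))
```

A single dimension exceeding the minimal figures is enough to move the recommendation to the larger deployment type.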

In order to deploy such a solution, load balancers are needed. However, vRealize Automation does not ship with a load balancer. VMware recommends using an NSX, F5 BIG-IP or Citrix NetScaler load balancer, but other load balancers such as Apache can in principle be used as well. The architecture itself is shown in Fig. 4. The idea behind the architecture is to increase robustness, high availability and performance. Therefore, the different components are placed on separate nodes. Some of them can then be used in an active-active cluster or scaled horizontally, while others can work only in an active-passive cluster.

| Fig. 4: Logical design for an enterprise deployment |

3.4.4 High-availability and scalability considerations

Now that we have talked about the hardware requirements, you need to decide whether or not you need a certain level of high availability in your environment. You also need to work out how much load you want to serve with vRealize Automation. With that knowledge in mind, we can roughly figure out how many servers we need to deploy. While in test and lab environments a minimal deployment will be sufficient (with most of the IaaS components on one node), we have to scale out our environment for bigger loads. For many environments, the vSphere HA feature will be sufficient. However, if you want to minimize downtime, you have to investigate each component in vRealize Automation and figure out how HA can be guaranteed. Let’s take a look at the different components in detail:

3.4.4.1 vRealize Automation appliance

The vRealize Automation appliance supports active-active high-availability. To enable high-availability, the appliance nodes only have to be placed behind a load-balancer.

From vRealize Automation 7 onwards, the embedded vPostgres database is automatically clustered. While older vRealize Automation versions used a dedicated vPostgres database for HA, the recommended way is now to use the internal database. However, there is no automatic failover. In the event of a failure, you have to promote the new master manually or write scripts to automate the promotion.

The same applies to the embedded Orchestrator instance: there is now built-in support for high availability. Nevertheless, for performance reasons you can still set up separate Orchestrator nodes and put them into a cluster behind a load balancer.

When accessing an active-active cluster, users should always be redirected to the very same node in subsequent requests. Therefore, the ‘sticky-session’ or the ‘session affinity’ feature should be activated on the load balancer.
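What session affinity means can be shown with a toy model. The Python sketch below assigns each new session to the next node in turn and then keeps sending that session to the same node. It illustrates the concept only and does not reflect how any particular load balancer implements the feature. The node names are hypothetical.

```python
import itertools


class StickyBalancer:
    """Toy load balancer with session affinity (illustration only)."""

    def __init__(self, nodes):
        self._next_node = itertools.cycle(nodes)  # used for new sessions only
        self._affinity = {}                       # session id -> node

    def route(self, session_id):
        """Return the node for this session, pinning it on first contact."""
        if session_id not in self._affinity:
            self._affinity[session_id] = next(self._next_node)
        return self._affinity[session_id]


lb = StickyBalancer(["vra-app-01", "vra-app-02"])
first = lb.route("alice")
lb.route("bob")
print(first == lb.route("alice"))  # alice stays on her original node
```

Real load balancers usually implement affinity with a cookie or the source IP address, and they also expire entries and handle node failures, which this sketch leaves out.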

3.4.4.2 Authentication Services

As already described, with the current release of vRA the appliance also hosts the authentication functionality. Because the Authentication Services are part of the vRealize Automation appliance, we no longer have to take care of their high availability separately.

3.4.4.3 IaaS components

A lot of work for configuring high-availability and scalability has to be done on the IaaS components as well. In small environments, these components are all installed on a single node. However, in bigger environments, the components have to be distributed over different nodes and each of them has to be configured for HA and scalability. Remember, we have several different components on the IaaS server:

- The IaaS web server hosts the Model Manager and the IaaS user interface (displayed as a frame from the vRA appliance).

- The Manager Service coordinates the communication between the database, LDAP or Active Directory and an SMTP server.

- Distributed Execution Managers (DEMs) communicate with external systems to provision resources.

- Proxy agents know how to interact with hypervisors.

Keeping this knowledge in mind, we can now concentrate on these components.

IaaS Web server

The IaaS web server can be placed behind a load balancer – in the same manner as the vRealize Automation appliance – hence supporting an active-active cluster configuration. However, as no user is directly accessing the web server (only the vRA appliance), there is no need for ‘sticky session’ or ‘session affinity’. Instead the ‘Least Response Time’ or ‘round-robin’ algorithm can be used. As previously mentioned, any load balancer with these features can be used.
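For comparison with session affinity, a least-response-time policy simply picks the node that currently answers fastest. The following Python sketch is a strongly simplified version of that idea, since real load balancers often combine response time with the number of open connections. The node names and timings are hypothetical.

```python
def pick_least_response_time(avg_response_ms):
    """Return the node with the lowest observed average response time.

    `avg_response_ms` maps node name -> average response time in milliseconds.
    """
    if not avg_response_ms:
        raise ValueError("no nodes available")
    return min(avg_response_ms, key=avg_response_ms.get)


# Hypothetical measurements for two IaaS web servers.
measurements = {"iaas-web-01": 42.0, "iaas-web-02": 17.5}
print(pick_least_response_time(measurements))
```

Because no session state is pinned to a node, every request can be sent to whichever web server is currently the best choice.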

It is important to act with caution when installing IaaS web servers in your environment. While you can install many instances of the website component, only one node is allowed to run the Model Manager data component (usually the first node in the deployment). The other nodes will just run the website component.

There are also some performance considerations. At runtime, the IaaS web server is usually CPU-bound. So, if you notice latency in your environment, monitor the IaaS web servers. It is also easier to scale up your web server (add more CPUs or memory) than to scale out (add another node behind the load balancer).

Manager Service

As opposed to the aforementioned components, you cannot run the Manager Service in an active-active cluster configuration. Instead, you need a second server, which acts as a cold standby for disaster recovery. From a performance point of view, this shouldn’t be a problem, as one Manager Service can easily serve tens of thousands of managed virtual machines. The failover itself can happen manually or (better practice) via a load balancer. In this case, however, no load-balancing algorithm can be applied, because only one node is active at any time.
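The routing decision for such an active-passive pair can be sketched as a priority list: all traffic goes to the first node that passes its health check. The Python function below illustrates only this decision. The node names and the health-check callable are hypothetical, and starting the service on the standby node is outside the scope of the sketch.

```python
def select_manager_service(nodes, is_healthy):
    """Return the first healthy node.

    `nodes` is ordered by priority: the active node first, then the standby.
    `is_healthy` is a callable that takes a node name and returns True or False.
    """
    for node in nodes:
        if is_healthy(node):
            return node
    raise RuntimeError("no Manager Service node is healthy")


# Hypothetical health state: the active node is down, the standby is up.
health = {"manager-01": False, "manager-02": True}
print(select_manager_service(["manager-01", "manager-02"], health.get))
```

In contrast to the active-active case, no request is ever sent to the second node as long as the first one is healthy.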

DEM Orchestrator

Similar to the Manager Service, only one instance of a DEM Orchestrator can be active at any time in a vRealize Automation environment. Therefore, an active-passive configuration is required. That being said, there is no need to configure a load balancer for the DEM Orchestrator, as DEM Orchestrators can automatically monitor themselves. When a DEM Orchestrator is started, it automatically searches for another running DEM Orchestrator instance. If none is found, it becomes the primary DEM Orchestrator. If there is already a working DEM Orchestrator, it will start as a secondary node and monitor the primary. If the primary DEM Orchestrator fails, the secondary automatically takes its place. Later, if the old primary DEM Orchestrator comes back again, it will detect that there is another instance running and switch to a secondary node.
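The start-up and failover behaviour described above can be replayed in a small simulation. The Python sketch below models only the role changes (primary, secondary, stopped) and says nothing about how the DEM Orchestrators actually detect each other. All class and instance names are hypothetical.

```python
class DemOrchestrator:
    """Hypothetical stand-in for one DEM Orchestrator instance."""

    def __init__(self, name):
        self.name = name
        self.running = False
        self.role = None  # "primary", "secondary" or None when stopped


class Environment:
    """Tracks all DEM Orchestrator instances and enforces a single primary."""

    def __init__(self):
        self.instances = []

    def primary(self):
        """Return the running primary, or None if there is none."""
        for inst in self.instances:
            if inst.running and inst.role == "primary":
                return inst
        return None

    def start(self, inst):
        """Start an instance; it becomes secondary if a primary already runs."""
        if inst not in self.instances:
            self.instances.append(inst)
        inst.role = "secondary" if self.primary() else "primary"
        inst.running = True

    def fail(self, inst):
        """Stop an instance and promote a running secondary if needed."""
        inst.running = False
        inst.role = None
        if self.primary() is None:
            for other in self.instances:
                if other.running:
                    other.role = "primary"
                    break


env = Environment()
a, b = DemOrchestrator("dem-o-1"), DemOrchestrator("dem-o-2")
env.start(a)   # no primary yet, so a becomes primary
env.start(b)   # a is primary, so b becomes secondary
env.fail(a)    # b takes over as primary
env.start(a)   # a comes back and joins as secondary
print(a.role, b.role)
```

The important property is that at most one instance holds the primary role at any point in the sequence.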

It is also important to note that the DEM Orchestrator and the Model Manager work closely together to execute all kinds of workflows. Consequently, they should be placed close to each other and should have high network bandwidth available for communication.

DEM Worker

The components that actually run the workflows are called DEM Workers. They should be deployed near the external resources they are communicating with. For example, if you have different datacenter locations, make sure you have a DEM Worker running in each of these locations.

DEM Workers are mainly CPU-bound, as they run the workflows. A DEM Worker can run up to 15 workflows in parallel. If the workflow queue is constantly long, consider vertical scaling first (i.e. add CPU and RAM). Nevertheless, you can easily add another DEM Worker. In addition, you can configure the workflow scheduling in order to run certain workflows during off-hours or to increase the interval at which they run.

If a DEM Worker becomes unavailable, the DEM Orchestrator cancels all its workflow tasks and assigns them to other available workers.
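The limit of 15 parallel workflows per DEM Worker gives a simple lower bound for the number of workers. The Python sketch below computes that bound. It is a back-of-the-envelope aid only: it ignores CPU and memory sizing and the advice to place a worker in every datacenter location. The function name is made up for this example.

```python
import math

MAX_PARALLEL_WORKFLOWS_PER_WORKER = 15  # figure quoted in this section


def workers_needed(concurrent_workflows):
    """Lower bound on DEM Workers for a given number of parallel workflows."""
    if concurrent_workflows < 0:
        raise ValueError("concurrent_workflows must not be negative")
    # At least one worker is always required, even for an idle system.
    return max(1, math.ceil(concurrent_workflows / MAX_PARALLEL_WORKFLOWS_PER_WORKER))


for load in (10, 15, 16, 100):
    print(load, workers_needed(load))
```

When planning for the failure of a worker, add at least one worker to this bound so that the reassigned workflows still find free capacity.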

Microsoft SQL Server

The last component to consider within the software stack is the Microsoft SQL Server. As the database is a critical component, you should also think about how to make it highly available. There are different methods for achieving high availability with Microsoft SQL Server: a cold standby server, a mirrored standby server or a cluster (recommended by VMware).

vRealize Business Appliance

One vRealize Business Appliance can scale up to 20000 virtual machines in up to four different vCenter servers. The very first synchronization takes up to three hours to finish. Later synchronizations take between one and two hours. Like the vRA appliance, the vRealize Business Appliance can be put behind a load balancer. However, you must consider that data collection can only take place from one node of the cluster.

3.5 Summary

In this chapter, we talked about the design of a vRealize Automation cloud environment. As a cloud management platform is usually placed on top of a virtual infrastructure, the design of the underlying platform has to be considered as well, especially when a greenfield approach is adopted. As this book only covers vRealize Automation, the underlying infrastructure design is not discussed. Instead, we have shown the different stages in the design of a vRealize Automation environment along with the design deliverables that should be created. In addition, we discussed possible deployment architectures of vRealize Automation including the logical and physical design.
